Linguistics of German Twitter

... a project by Tatjana Scheffler

Goal:

Access Twitter data for linguistic research

Posters/Slides

Using Twitter Data for (Linguistic) Research

How-To: Corpus Construction

Links and Resources

Posters and Slides (by TS & collaborators)

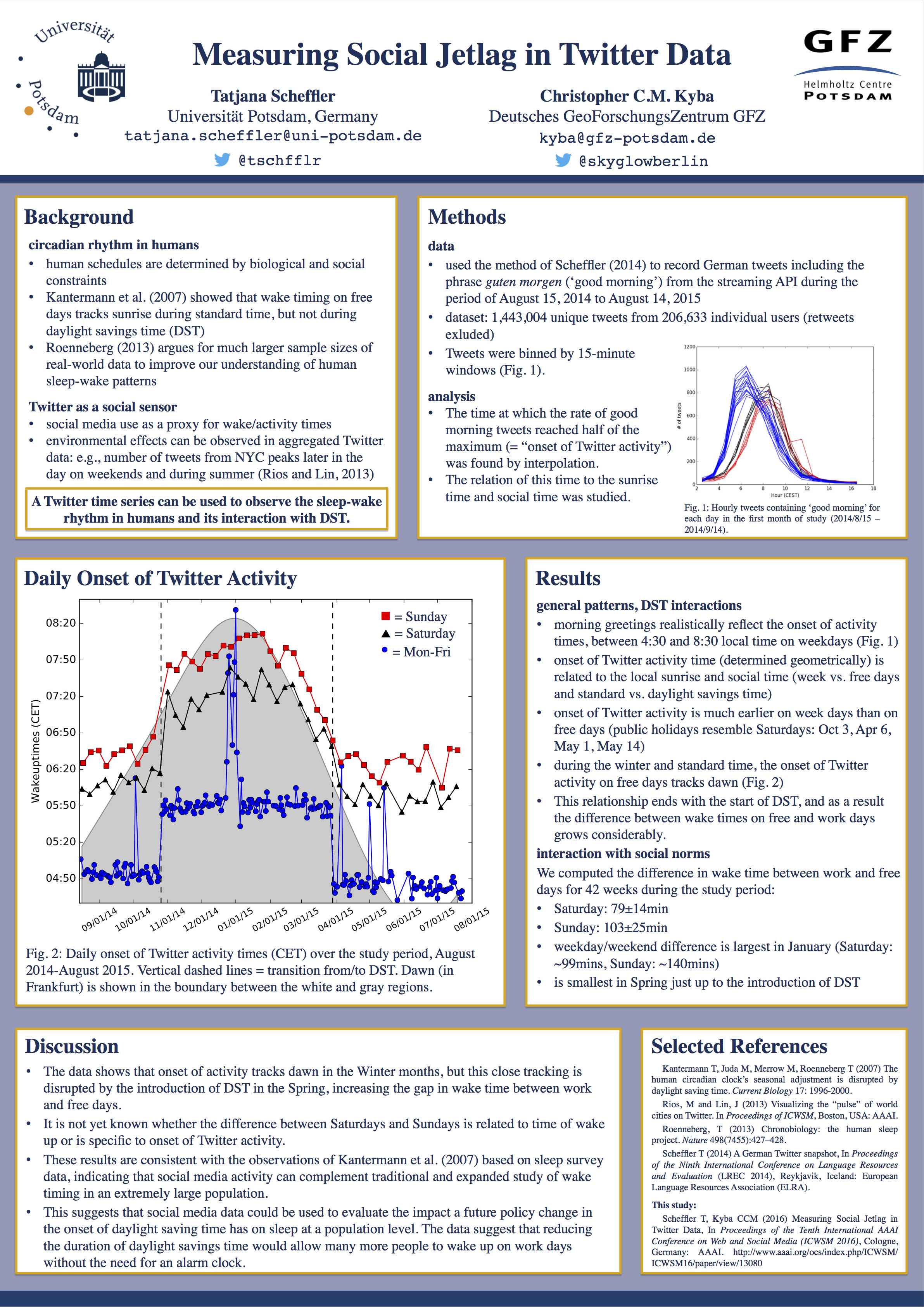

Measuring Social Jetlag in Twitter Data. (with Christopher Kyba) Proceedings of the Tenth International AAAI Conference on Web and Social Media (ICWSM 2016), AAAI, Köln, Germany. 2016.

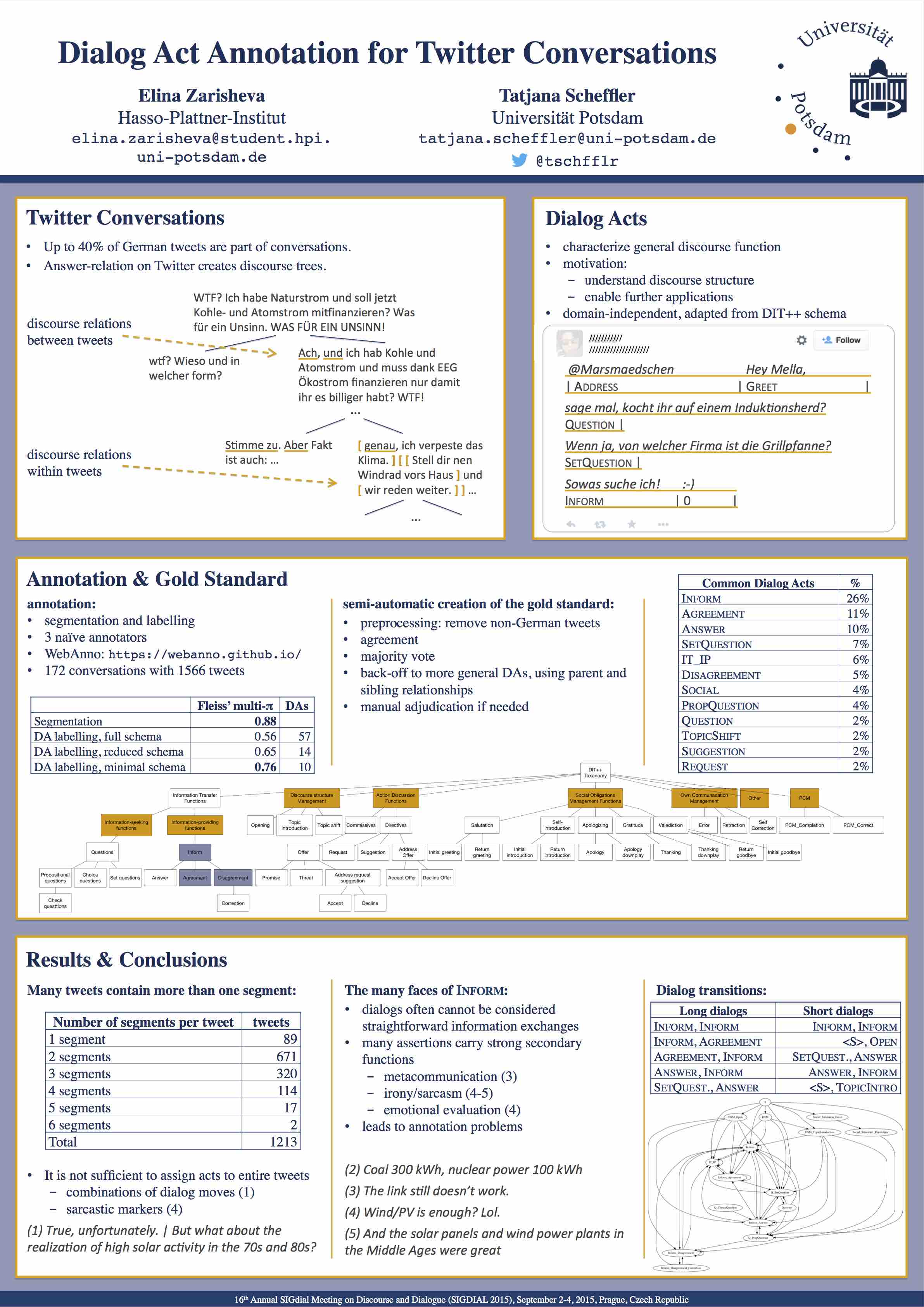

Dialog Act Recognition for Twitter Conversations. (with Elina Zarisheva) Proceedings of the Workshop on Normalisation and Analysis of Social Media Texts (NormSoMe), Portorož, Slovenia. 2016.

Dialog act annotation for Twitter conversations. SigDial Conference, September 2, 2015, Prague, Czech Republic

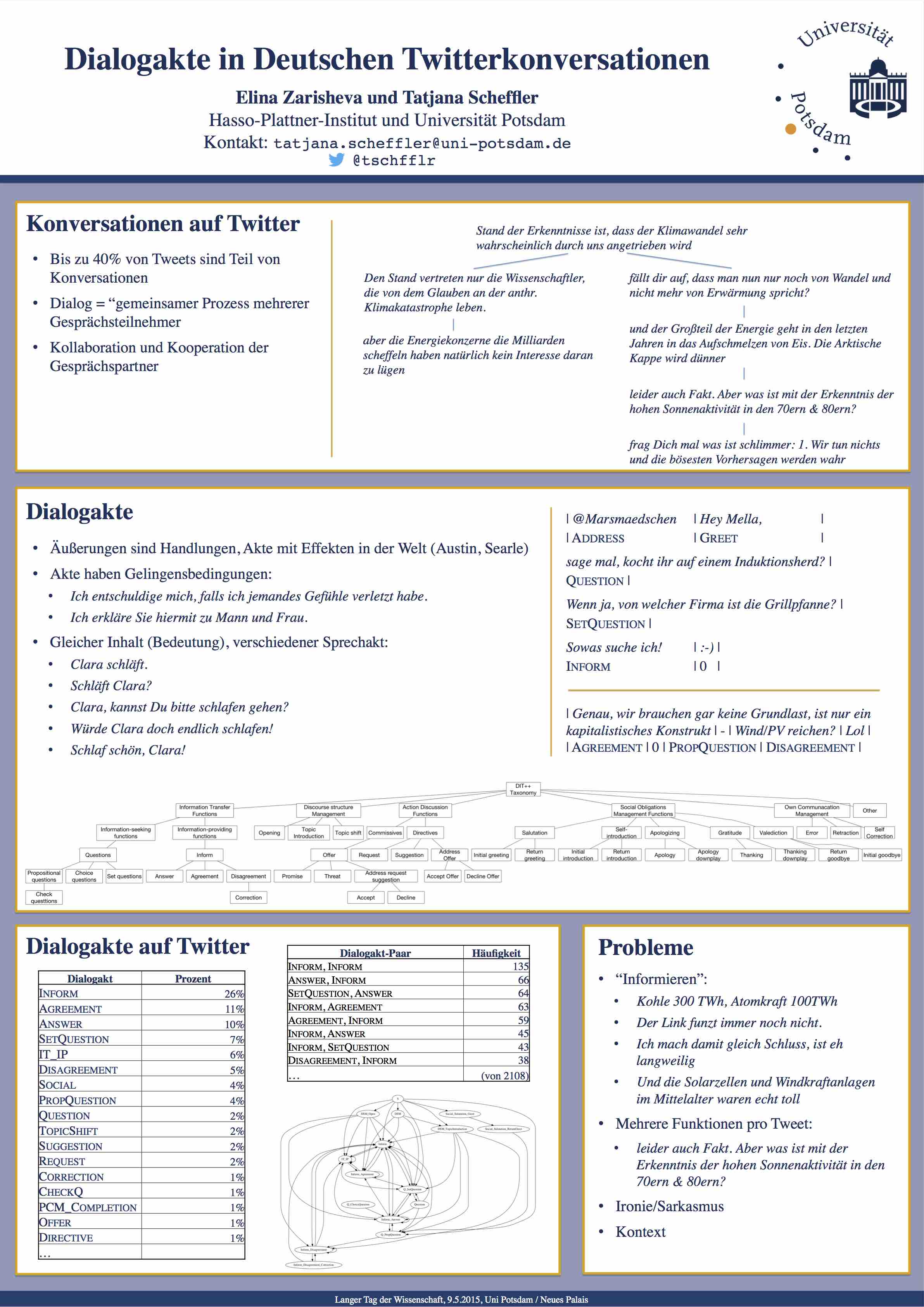

Dialogakte in deutschen Twitterkonversationen [Dialog acts in German Twitter conversations]. (German) Langer Tag der Wissenschaft, May 9, 2015, Universität Potsdam

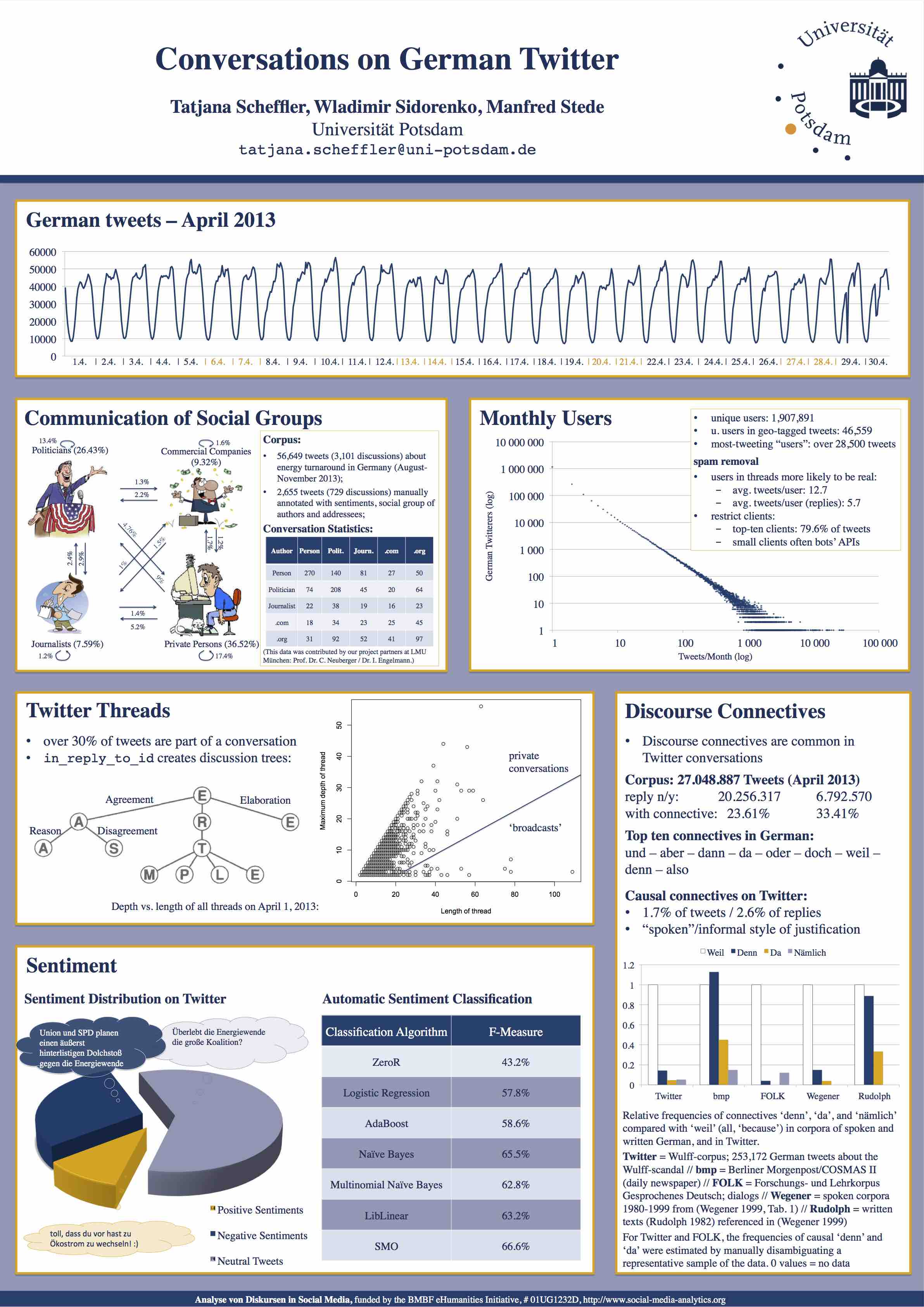

Conversations on German Twitter. Social Media Workshop, October 24, 2014, FU Berlin

Introduction to Twitter data and its use for linguistic research. Contains an example of the data (a tweet in JSON format). (German) Guest lecture in the seminar “Soziale Bewegungen im Internet” [Social movements on the Internet], May 2014, FU Berlin

A German Twitter Snapshot. Corpus construction and analysis. (English) 9th Language Resources and Evaluation Conference (LREC), May 26-31, 2014, Reykjavik, Iceland

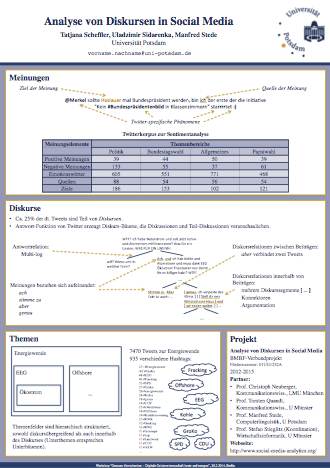

Analyse von Diskursen in Social Media [Analysis of discourses in social media]. Presentation of the BMBF subproject. (German) Workshop “Grenzen überschreiten – Digitale Geisteswissenschaft heute und morgen”, February 28, 2014, Berlin

Basic statistics about German Twitter data. (German) Tausend Fragen – Eine Stadt, June 8, 2013, Golm/Potsdam

Erstellung eines deutschen Twitterkorpus [Construction of a German Twitter corpus]. (German) DGfS-CL poster session, 35th Annual Meeting of the Deutsche Gesellschaft für Sprachwissenschaft (German Linguistic Society), March 14, 2013, Potsdam

Using Twitter for (Linguistic) Research

General comments on using Twitter for linguistic research - coming soon!

Constructing a Twitter Corpus

Background

The Twitter API terms do not allow the distribution of aggregated tweets (i.e., corpora), but researchers can collect their own data. This package makes it possible to record, in real time, a representative portion of the tweets in a specific language.

For languages other than English in particular, the volume of tweets is small enough that a near-complete snapshot of all tweets over a given period can be collected in real time without hitting the API rate limits.

Some programming experience is helpful, but running the script should be possible without it if you are able to install the necessary Python packages.

How-To

To build your own Twitter corpus, for example of all tweets in a given language, follow these steps:

- Install Python if it is not already included in your operating system.

- Get the Python package Tweepy, which wraps access to the Twitter stream.

- Register as a Twitter user.

- Create a new Twitter application and obtain its consumer key and consumer secret.

- After the step above, you will be redirected to your app's page. Create an access token in the "Your access token" section.

- Download the Twitter-for-Linguists script Twython and insert your consumer key and secret, as well as your access token and access token secret, into the appropriate lines.

- Create a file of keywords to be tracked on Twitter (up to 400 words, one per line) and save it as "twython-keywords.txt" in the same directory as the script. Alternatively, you can download my German stopword list to obtain almost all German tweets (make sure to rename it to "twython-keywords.txt" or change the file name in the script).

- Run the script with "python twython.py" from a console.
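The keyword-file step can be sketched in Python as follows. This is a minimal illustration, not the code of twython.py: the function name `load_keywords` is hypothetical, and the sketch assumes only what the steps above state, namely one keyword per line and at most 400 keywords. It also skips blank lines and duplicates, which is an added assumption.

```python
from pathlib import Path

MAX_KEYWORDS = 400  # limit on tracked keywords mentioned in the steps above


def load_keywords(path="twython-keywords.txt", limit=MAX_KEYWORDS):
    """Read one keyword per line (hypothetical helper, not from twython.py).

    Blank lines and repeated keywords are skipped; a ValueError is raised
    if more keywords remain than the limit allows.
    """
    keywords = []
    seen = set()
    text = Path(path).read_text(encoding="utf-8")
    for line in text.splitlines():
        word = line.strip()
        if word and word not in seen:
            seen.add(word)
            keywords.append(word)
    if len(keywords) > limit:
        raise ValueError(
            f"{path} has {len(keywords)} keywords; at most {limit} can be tracked"
        )
    return keywords
```

Tweepy's `Stream.filter` accepts such a list through its `track` argument; how the stream object itself is created differs between Tweepy versions, so check the documentation of the version you installed.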

Additional Notes

- Twython uses Langid to keep only German tweets. To collect another language, or to use your own language identifier, modify or delete lines 54 and 55 of the script ('lang = langid.classify(status.text)[0];', 'if lang == "de":').

- By default, Twython writes one tweet per line, with its complete metadata as a JSON object. The output can be changed in line 56 ('outfile.write( data + "\n" )').

- The script runs until it is aborted or Twitter closes the stream. After simple errors, the script tries to reconnect, but it still needs to be monitored in case it has stopped.

- A new output file is started each day and named with the current date (e.g., tweets-2012-12-24.txt).
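The filtering and output behaviour described in these notes can be sketched as follows. This is a hypothetical reconstruction, not the code of twython.py: the names `output_name`, `write_if_language` and `record` are invented for illustration, and the language identifier is passed in as a function so that it can be swapped out. Langid's `classify` returns a (language, score) pair, which is why the script takes `[0]`; with Langid installed, passing `lambda text: langid.classify(text)[0]` as `classify` would mirror the check in lines 54 and 55. For testing, `record` takes any iterable of raw JSON strings in place of a live stream.

```python
import json
import os
from datetime import date


def output_name(day):
    """File name for one day of output, e.g. tweets-2012-12-24.txt."""
    return f"tweets-{day.isoformat()}.txt"


def write_if_language(raw_json, classify, outfile, wanted="de"):
    """Write one raw tweet (a JSON string) to outfile if its text is in `wanted`.

    `classify` maps a text to a language code such as "de" (hypothetical
    interface). Returns True if the tweet was written.
    """
    status = json.loads(raw_json)
    text = status.get("text")
    if text is None:  # e.g. deletion notices carry no tweet text
        return False
    if classify(text) != wanted:
        return False
    # One tweet per line, keeping the complete metadata as the original JSON.
    outfile.write(raw_json.strip() + "\n")
    return True


def record(lines, classify, directory=".", wanted="de", today=date.today):
    """Filter an iterable of raw JSON strings into one output file per day."""
    current_day, outfile = None, None
    try:
        for raw in lines:
            if not raw.strip():  # skip blank keep-alive lines
                continue
            day = today()
            if day != current_day:  # a new day has started: switch files
                if outfile is not None:
                    outfile.close()
                current_day = day
                path = os.path.join(directory, output_name(day))
                outfile = open(path, "a", encoding="utf-8")
            write_if_language(raw, classify, outfile, wanted)
    finally:
        if outfile is not None:
            outfile.close()
```

Writing the raw JSON string instead of re-serialising the parsed object keeps the metadata exactly as Twitter delivered it, and opening each file explicitly as UTF-8 avoids depending on the platform's default encoding.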

Log of Changes

- 18 March 2013 -- Fixed problems with non-Unicode (Latin-1) encodings; the script now outputs the full JSON data.

Links and Resources

- Some resources (including a "Twitter-aware tokenizer") by Christopher Potts

... please email me if you would like a tool included in this list.